Basic Statistics in Sociological Research Important Questions

Here are 10 important questions that cover the key concepts from all the units you've studied so far. These questions will help you prepare for your exams, focusing on both theoretical understanding and practical application:

### **Unit I: Key Statistical Concepts**

1. **Explain the differences between univariate, bivariate, and multivariate data. Provide examples of how each type can be used in sociological research.**

- This question tests your understanding of different data types and their applications.

2. **Discuss the importance of summarizing data through measures of central tendency and measures of dispersion. How do mean, median, mode, range, variance, and standard deviation help in sociological analysis?**

- This will require you to explain the significance of these statistical measures and how they are applied.

3. **Compare and contrast cross-sectional, cohort, and panel data. In what situations would each type be used in sociological research?**

- This question focuses on different research designs and when to use each.

---

### **Unit II: Analysis of Nominal-scale Data**

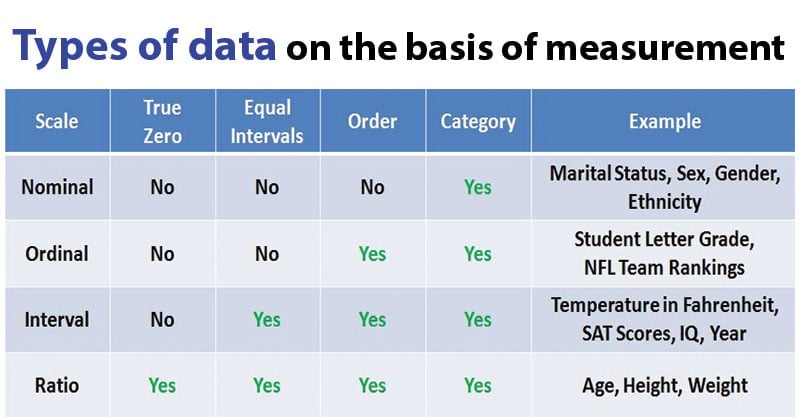

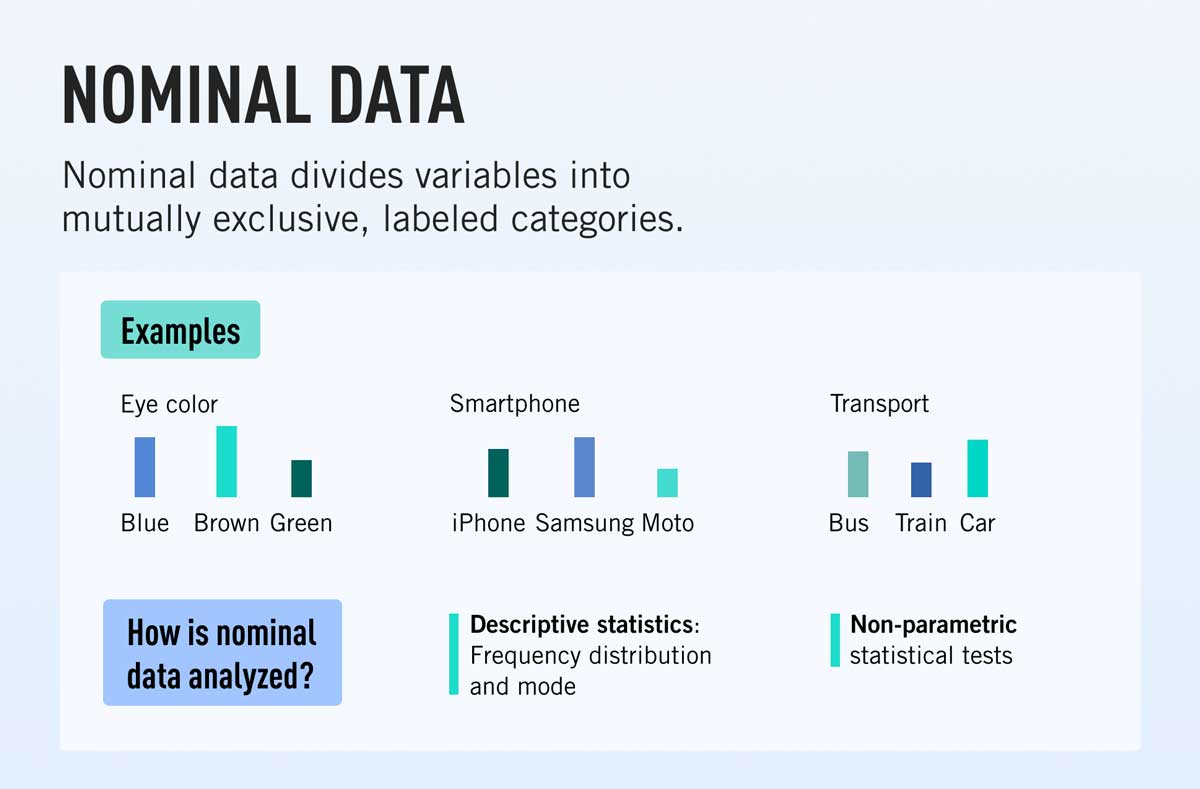

4. **What is the rationale for analyzing nominal-scale data? How are proportions, percentages, and ratios used in nominal-scale analysis?**

- You need to explain the reasoning behind nominal-scale data analysis and its practical application.

5. **Explain how the chi-square test is used in bivariate analysis of nominal-scale data. What is the role of the level of significance in this analysis?**

- This will test your understanding of the chi-square test and significance levels in sociological research.

---

### **Unit III: Analysis of Ordinal-scale Data**

6. **Discuss the rationale for analyzing ordinal-scale data. How do you interpret the results of a rank correlation coefficient?**

- This question focuses on the rationale for ordinal data analysis and the interpretation of rank correlation.

---

### **Unit IV: Analysis of Interval- and Ratio-scale Data**

7. **What is the difference between a one-sample Z test, t-test, and F test? In what research situations would you use each?**

- This question tests your knowledge of the different tests for interval and ratio data and their applications.

8. **Explain the concept of a scatter diagram and correlation coefficient. How would you interpret a Pearson's correlation coefficient in a sociological study?**

- This requires you to explain and apply the concept of correlation to real-world sociological research.

---

### **Unit V: Analysis of Variance (ANOVA)**

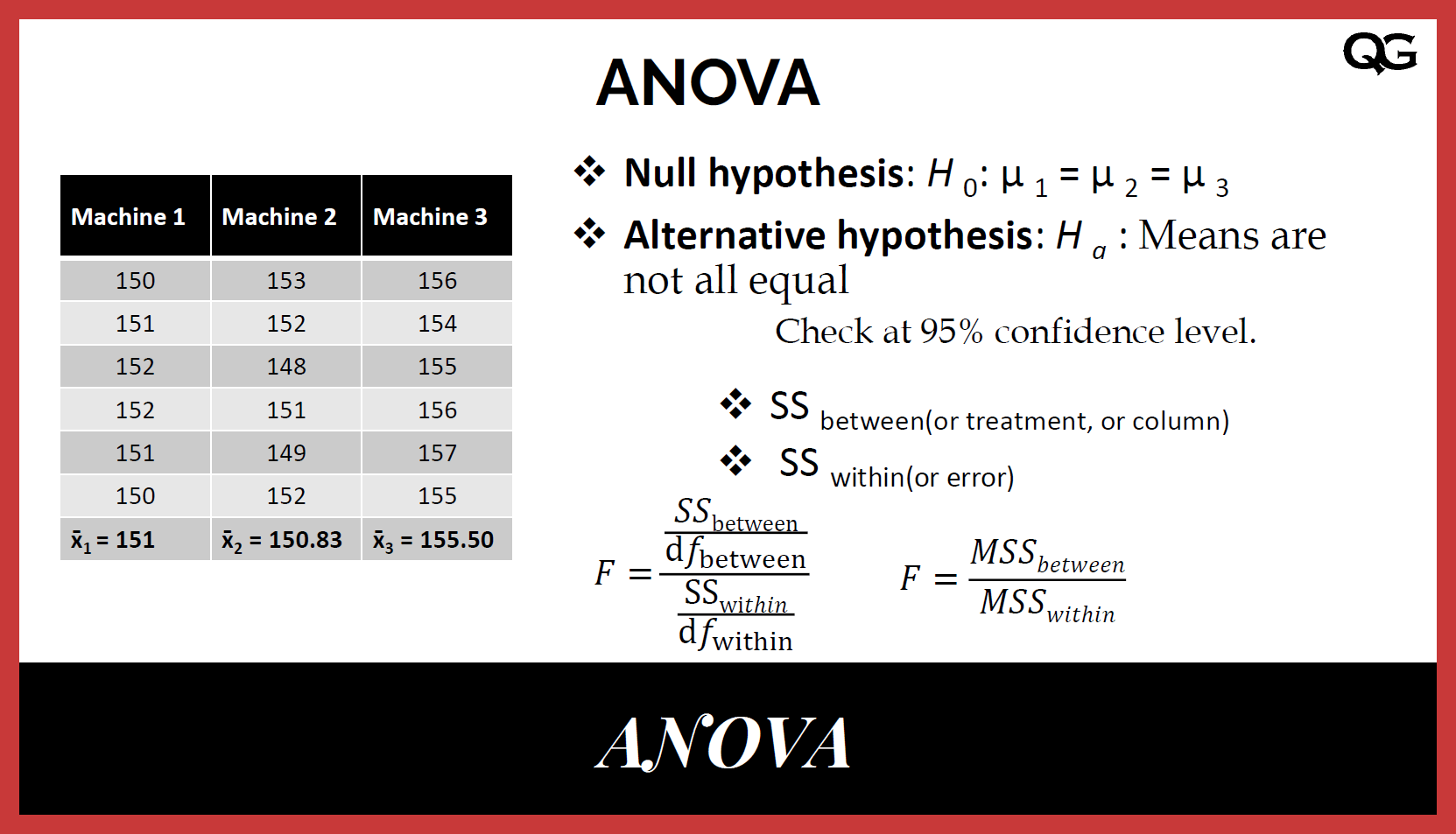

9. **What is the logic behind Analysis of Variance (ANOVA)? How does ANOVA differ from a t-test, and when would you use ANOVA in sociological research?**

- This question will assess your understanding of the fundamental logic of ANOVA and its comparison to other statistical tests.

10. **After conducting ANOVA, why is it necessary to perform multiple comparison tests? Describe Tukey's HSD and how it is used to interpret the results of ANOVA.**

- This will test your understanding of post-hoc tests and their importance after ANOVA.

---

These questions cover a wide range of statistical concepts, including data types, analysis techniques, and interpretation, all essential for your sociology exams. Let me know if you need any additional explanations or practice questions!