Analysis of Variance (ANOVA)

### Unit V: Analysis of Variance (ANOVA)

#### A. **The Logic of Analysis of Variance**

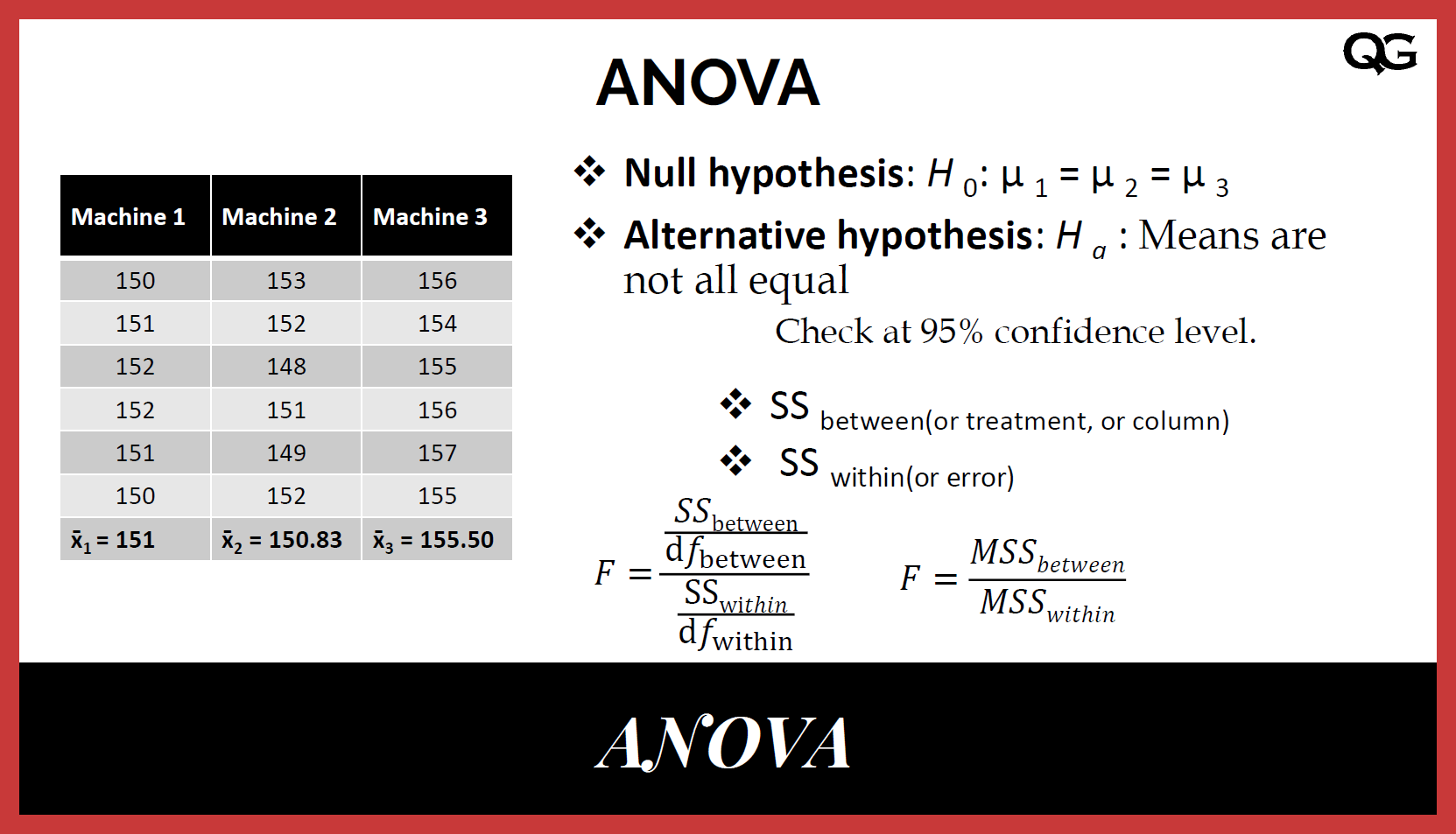

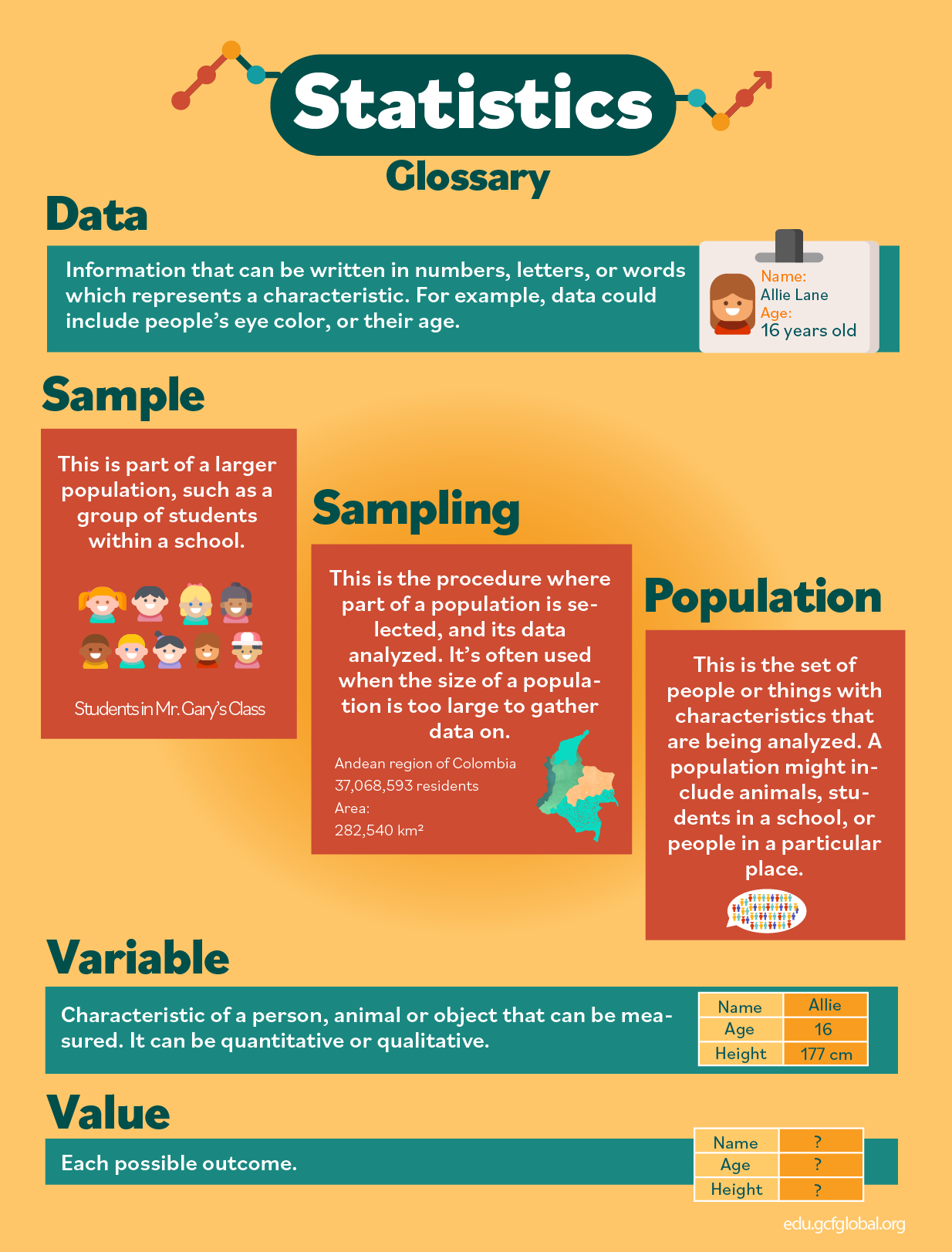

Analysis of Variance (ANOVA) is a statistical technique used to determine whether there are significant differences between the means of three or more groups. The key logic behind ANOVA is to test the hypothesis that all group means are equal, versus the alternative hypothesis that at least one group mean is different.

ANOVA compares the variance within each group to the variance between the groups:

- **Within-group variance** measures how much individuals in the same group differ from the group mean.

- **Between-group variance** measures how much the group means differ from the overall mean.

If the between-group variance is significantly larger than the within-group variance, it suggests that the groups are not all the same, leading to the rejection of the null hypothesis.

The F-ratio is used in ANOVA to compare these variances:

\[

F = \frac{\text{Between-group variance}}{\text{Within-group variance}}

\]

If the F-ratio is large, it suggests that there is a significant difference between group means.

---

#### B. **Analysis of Variance**

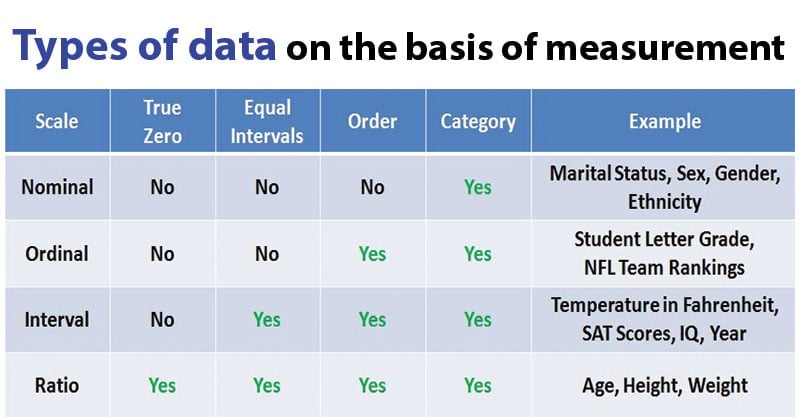

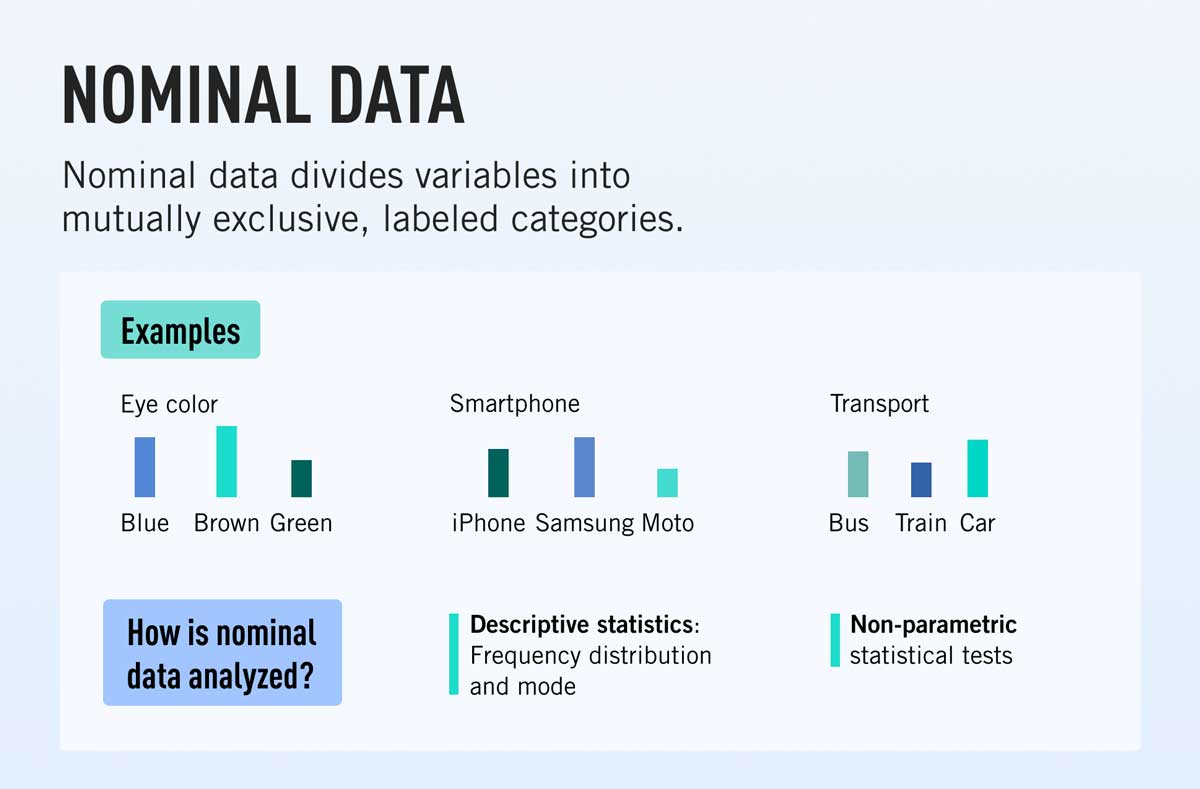

ANOVA can be conducted for different types of data:

- **One-Way ANOVA**: Used when comparing the means of three or more independent groups on one factor. For example, you might compare the academic performance (measured by test scores) of students from three different educational methods.

Steps in One-Way ANOVA:

1. Calculate the **total variance** (the variance of all observations).

2. Break down the total variance into **between-group variance** and **within-group variance**.

3. Compute the **F-ratio**.

4. Compare the F-ratio to a critical value from the F-distribution table, which depends on the number of groups and sample sizes. If the calculated F-ratio is larger than the critical value, the null hypothesis (that all group means are equal) is rejected.

- **Two-Way ANOVA**: Used when there are two independent variables, allowing the researcher to assess not only the main effects of each variable but also the interaction effect between the two variables. For instance, you might examine the effects of both gender and study method on academic performance.

---

#### C. **Multiple Comparison of Means**

After conducting ANOVA, if the null hypothesis is rejected, it indicates that at least one group mean is different, but it doesn’t specify which groups are significantly different. To determine which specific group means differ from each other, **multiple comparison tests** (also called post hoc tests) are used. Common methods include:

- **Tukey’s Honestly Significant Difference (HSD)**: Compares all possible pairs of means to identify which ones are significantly different.

- **Bonferroni Correction**: Adjusts the significance level to account for multiple comparisons, reducing the chance of Type I errors (false positives).

- **Scheffé’s Test**: A more conservative post hoc test, especially useful when comparing all possible contrasts between means, not just pairwise comparisons.

These tests help provide a clearer picture of where the significant differences lie between the groups, beyond simply knowing that differences exist.

---

### **Readings** for this Unit:

1. **Levin and Fox**. (1969). *Analysis of Variance* (Chapter 8, pp. 283-308): This chapter provides an overview of the theory and application of ANOVA, focusing on how to conduct the analysis and interpret the results.

2. **Blalock, H.M.** (1969). *Analysis of Variance* (Chapter 16, pp. 317-360): This reading delves deeper into the mathematical foundation of ANOVA, offering a more comprehensive understanding of the statistical principles involved.

These readings will give you a solid foundation in understanding and applying ANOVA in sociological research, particularly when comparing group means. Let me know if you need further elaboration on any specific point!